Assigning Independent Proxy Tunnels to Each Container for TCP+UDP Transparent Forwarding

I recently had a requirement to assign different egress IP addresses to each container in a one-to-one mapping. However, these egress IPs are distributed across multiple remote machines and require connecting through different proxies, while the local machine only has a single unified egress IP for external network access.

The simplest approach would be to configure each program inside every container to use its corresponding proxy. Besides being tightly coupled and hard to manage, that approach exposes the proxy details inside the container. I preferred a transparent proxy: the entire container environment, including DNS and every program, reaches the network through a proxy tunnel and exits from a remote machine's egress IP. The container does not know the proxy exists and believes its network egress is the remote machine's egress IP.

To build this system, I used V2Fly's dokodemo-door protocol together with the kernel's netfilter REDIRECT and TPROXY targets.

According to V2Fly's official documentation, dokodemo-door is an inbound protocol that listens on a local port and forwards all incoming data to a designated destination. Combined with V2Fly's routing, I can write policies that send different source IPs through different proxy tunnels. As long as each container gets its own IP, it automatically uses its corresponding tunnel, which supports both one-to-one and many-to-one mappings. All of this runs in a single V2Fly instance rather than several.
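The source-based tunnel selection can be modeled in a few lines. This is a simplified sketch, not V2Fly code. It assumes two things:

- rules are checked in order and the first match wins;
- unmatched traffic falls back to the first outbound, as V2Fly's routing documentation describes.

The extra container 10.8.0.13 is hypothetical. It shows a many-to-one mapping onto hk2.

```python
import ipaddress

# Hypothetical rule table mirroring the "field" rules in Step 2:
# each entry is (source IPs or CIDRs, outboundTag). First match wins.
RULES = [
    (["10.8.0.10"], "hk0"),
    (["10.8.0.11"], "hk1"),
    (["10.8.0.12", "10.8.0.13"], "hk2"),  # many-to-one: two containers share hk2
]
# Assumed outbound order; unmatched traffic goes to the first outbound.
OUTBOUNDS = ["hk0", "hk1", "hk2"]


def select_outbound(src_ip):
    """Return the outbound tag chosen for traffic from src_ip."""
    addr = ipaddress.ip_address(src_ip)
    for sources, tag in RULES:
        for src in sources:
            # strict=False lets a bare IP act as a /32 network
            if addr in ipaddress.ip_network(src, strict=False):
                return tag
    return OUTBOUNDS[0]


if __name__ == "__main__":
    for ip in ["10.8.0.10", "10.8.0.12", "10.8.0.13", "10.8.0.99"]:
        print(ip, "->", select_outbound(ip))
```

The last case shows why the fallback matters: an unlisted container silently uses the first tunnel instead of being rejected.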

Step 1: Create Docker Network and Start Containers

First, create a bridge network for the containers. The bridge's main interface is assigned 10.8.0.1, and my containers get addresses starting at 10.8.0.10. I added the internal flag so that Docker does not create its automatic masquerade and related netfilter rules; I configure forwarding myself later.

I also pointed the containers at Google's and Cloudflare's public DNS. By default they would query the Docker daemon's local DNS server. That server resolves through the host's configured DNS servers, which in practice means the local ISP's resolvers, so DNS traffic would bypass the proxy tunnel. With explicit DNS servers, the containers query those servers directly instead of going through Docker's DNS server.

```bash
docker network create --internal --subnet=10.8.0.0/24 mynetwork

docker run -id --name mycontainer0 --network mynetwork --ip 10.8.0.10 --dns 8.8.8.8 --dns 1.1.1.1 alpine
docker run -id --name mycontainer1 --network mynetwork --ip 10.8.0.11 --dns 8.8.8.8 --dns 1.1.1.1 alpine
docker run -id --name mycontainer2 --network mynetwork --ip 10.8.0.12 --dns 8.8.8.8 --dns 1.1.1.1 alpine
```
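With more containers, writing these commands by hand becomes error-prone. A small helper can generate them instead; `docker_run_argv` is a hypothetical name. It assigns IPs sequentially from 10.8.0.10 and only prints the commands (a dry run). To actually run them, you would pass each argument list to `subprocess.run`.

```python
import ipaddress
import shlex


def docker_run_argv(index, first_ip="10.8.0.10", network="mynetwork",
                    dns=("8.8.8.8", "1.1.1.1"), image="alpine"):
    """Build the docker run argument list for container number `index`."""
    ip = ipaddress.ip_address(first_ip) + index  # one fixed IP per container
    argv = ["docker", "run", "-id", "--name", f"mycontainer{index}",
            "--network", network, "--ip", str(ip)]
    for server in dns:
        argv += ["--dns", server]  # bypass Docker's DNS so lookups use the tunnel
    argv.append(image)
    return argv


if __name__ == "__main__":
    for i in range(3):
        print(shlex.join(docker_run_argv(i)))  # dry run: print, do not execute
```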

Step 2: Configure V2Fly

Configure dokodemo-door in V2Fly inbounds to receive traffic from containers, accepting both TCP and UDP. Configure multiple proxy tunnels in outbounds, connecting to multiple remote machines. Then configure routing policies to specify which proxy tunnel each container should use.

```json
{
  "inbounds": [
    {
      "port": 10900,
      "listen": "10.8.0.1",
      "protocol": "dokodemo-door",
      "settings": {
        "network": "tcp,udp",
        "followRedirect": true
      }
    }
  ],
  "outbounds": [
    // Connect to remote machines: hk0, hk1, hk2
  ],
  "routing": {
    "rules": [
      {
        "type": "field",
        "outboundTag": "hk0",
        "source": ["10.8.0.10"]
      },
      {
        "type": "field",
        "outboundTag": "hk1",
        "source": ["10.8.0.11"]
      },
      {
        "type": "field",
        "outboundTag": "hk2",
        "source": ["10.8.0.12"]
      }
    ]
  }
}
```
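Each routing rule simply pairs a source IP with an outbound tag, so the inbound and routing sections can also be generated from a mapping. This is a sketch using a hypothetical `build_config` helper. It deliberately leaves `outbounds` empty, because the hk0 to hk2 outbound settings depend on your remote machines and must be filled in separately.

```python
import json


def build_config(mapping, listen="10.8.0.1", port=10900):
    """mapping: {container source IP: outboundTag}. Returns a config dict."""
    return {
        "inbounds": [{
            "port": port,
            "listen": listen,
            "protocol": "dokodemo-door",
            "settings": {"network": "tcp,udp", "followRedirect": True},
        }],
        # Placeholder: add the real hk0/hk1/hk2 outbounds before use.
        "outbounds": [],
        "routing": {
            "rules": [
                {"type": "field", "outboundTag": tag, "source": [ip]}
                for ip, tag in mapping.items()
            ]
        },
    }


if __name__ == "__main__":
    cfg = build_config({"10.8.0.10": "hk0", "10.8.0.11": "hk1", "10.8.0.12": "hk2"})
    print(json.dumps(cfg, indent=2))
```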

Step 3: Network Forwarding

This is the core of the setup: packets sent by the containers must be forwarded to V2Fly at 10.8.0.1:10900.

TCP

First, handle TCP by adding REDIRECT rules with iptables in the host network namespace.

```bash
# Create the chain and skip reserved/private (bogon) destinations
iptables -t nat -N MYPROXY
iptables -t nat -A MYPROXY -d 0.0.0.0/8 -j RETURN
iptables -t nat -A MYPROXY -d 10.0.0.0/8 -j RETURN
iptables -t nat -A MYPROXY -d 127.0.0.0/8 -j RETURN
iptables -t nat -A MYPROXY -d 169.254.0.0/16 -j RETURN
iptables -t nat -A MYPROXY -d 172.16.0.0/12 -j RETURN
iptables -t nat -A MYPROXY -d 192.168.0.0/16 -j RETURN
iptables -t nat -A MYPROXY -d 224.0.0.0/4 -j RETURN
iptables -t nat -A MYPROXY -d 240.0.0.0/4 -j RETURN
# Redirect all remaining TCP to V2Fly's dokodemo-door port
iptables -t nat -A MYPROXY -p tcp -j REDIRECT --to-ports 10900

# Send TCP from the container subnet into MYPROXY
iptables -t nat -A PREROUTING -p tcp -s 10.8.0.0/24 -j MYPROXY
```

These rules create a MYPROXY chain that redirects every TCP packet to port 10900, except packets destined for reserved or private (bogon) ranges, which RETURN unchanged. REDIRECT takes no address argument: it rewrites the destination to the primary IP of the interface the packet arrived on, which here is the bridge's 10.8.0.1. The last rule sends TCP packets with source 10.8.0.0/24 from nat PREROUTING into MYPROXY. Together, the rules forward all TCP traffic from the containers to 10.8.0.1:10900 for V2Fly.
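The chain's decision can be mirrored in Python to check which destinations will be redirected. This sketch models only the MYPROXY rules listed above. It applies to TCP packets that have already matched the PREROUTING jump. `myproxy_verdict` is an illustrative name.

```python
import ipaddress

# Destinations that MYPROXY sends back with -j RETURN, in rule order
BYPASS = [ipaddress.ip_network(n) for n in (
    "0.0.0.0/8", "10.0.0.0/8", "127.0.0.0/8", "169.254.0.0/16",
    "172.16.0.0/12", "192.168.0.0/16", "224.0.0.0/4", "240.0.0.0/4",
)]


def myproxy_verdict(dst):
    """Verdict for a TCP packet from 10.8.0.0/24 that entered MYPROXY."""
    addr = ipaddress.ip_address(dst)
    if any(addr in net for net in BYPASS):
        return "RETURN"          # continue in PREROUTING, no redirect
    return "REDIRECT 10900"      # to the incoming interface's primary IP, 10.8.0.1


if __name__ == "__main__":
    for dst in ["1.2.3.4", "10.8.0.1", "192.168.1.1", "8.8.8.8"]:
        print(dst, myproxy_verdict(dst))
```

Note that 10.0.0.0/8 also covers the container subnet itself, so traffic to 10.8.0.1 is never redirected.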

Detailed packet path:

- A program inside container 10.8.0.12 sends a TCP packet; assume the destination is 1.2.3.4:12345. The container's routing table has the entry default via 10.8.0.1 dev eth0, so it resolves the MAC address of 10.8.0.1 via ARP. The packet's destination MAC therefore becomes the bridge main interface's MAC address.

- The TCP packet leaves the container namespace through eth0, which is one end of a veth pair, and arrives on the other end in the host namespace. The host-side veth is attached to the bridge, and the bridge has registered its rx_handler on it, so the received packet enters br_handle_frame(). That function sees that the destination MAC belongs to the bridge's main interface and passes the packet up to the host's network stack.

- In the network stack the packet goes through netfilter, including the nat table's PREROUTING chain, which runs before routing. The packet's source IP is 10.8.0.12 and its destination is 1.2.3.4:12345. The source matches, so the packet enters the MYPROXY chain and -j REDIRECT --to-ports 10900 is executed. The destination is rewritten to 10.8.0.1:10900, and the original destination is recorded in conntrack.

- During routing, the kernel finds that 10.8.0.1 is a local IP and delivers the packet to the socket listening on that address and port: V2Fly's inbound.

- The packet enters V2Fly for processing. V2Fly calls getsockopt(…SO_ORIGINAL_DST…) to find the packet’s original destination IP and port 1.2.3.4:12345.

- V2Fly requests the destination IP and port through the proxy tunnel.

- The destination server's TCP response comes back to V2Fly through the tunnel, and V2Fly replies to 10.8.0.12. At POSTROUTING, conntrack reverses the REDIRECT mapping (an implicit source NAT; no extra rule is needed). This rewrites the reply's source from 10.8.0.1:10900 back to the destination server's IP and port, 1.2.3.4:12345.

- The packet passes through the bridge and veth forwarding, returning to the program’s socket inside the container. The program believes it’s communicating directly with the destination server, achieving transparent proxy.
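The SO_ORIGINAL_DST lookup V2Fly performs can be sketched with Python's socket module. This is an illustration, not V2Fly's implementation. The constant 80 is an assumption taken from linux/netfilter_ipv4.h, since Python's socket module may not export it. Call `original_dst` on an accepted connection whose packets were redirected by the rules above.

```python
import socket
import struct

# From <linux/netfilter_ipv4.h>; assumed value, not read from Python's socket module
SO_ORIGINAL_DST = 80


def parse_sockaddr_in(raw):
    """Decode a 16-byte struct sockaddr_in into (ip, port)."""
    # Bytes 0-1: sin_family (host order), 2-3: sin_port (network order), 4-7: sin_addr
    port = struct.unpack("!H", raw[2:4])[0]
    return socket.inet_ntoa(raw[4:8]), port


def original_dst(conn):
    """Return the pre-REDIRECT (ip, port) conntrack recorded for an accepted TCP socket."""
    raw = conn.getsockopt(socket.IPPROTO_IP, SO_ORIGINAL_DST, 16)  # IPPROTO_IP == SOL_IP on Linux
    return parse_sockaddr_in(raw)
```

On the proxy side, you would call `original_dst(conn)` right after `accept()` on the 10.8.0.1:10900 listener. For the example packet, it should report ('1.2.3.4', 12345).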

UDP

Next, handle UDP forwarding. UDP is connectionless, which makes it harder to track. Unlike TCP, a UDP receiver cannot use SO_ORIGINAL_DST to recover the original destination; it needs IP_RECVORIGDSTADDR instead. Conntrack is also less reliable for long-lived UDP flows. By default a UDP entry expires after 30s if no reply has been seen, and after 120s once replies have been seen, so long connections that go quiet easily time out.
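For comparison, a UDP receiver gets the original destination from ancillary data. The sketch below assumes the Linux values from linux/in.h: IP_TRANSPARENT = 19 and IP_RECVORIGDSTADDR = 20. The helper names are hypothetical. It shows the socket options a TPROXY listener such as V2Fly's inbound relies on; it is not V2Fly's code. Opening the listener requires privileges such as CAP_NET_ADMIN.

```python
import socket
import struct

# Linux values from <linux/in.h>; assumed here, not read from Python's socket module.
IP_TRANSPARENT = 19
IP_RECVORIGDSTADDR = 20  # the cmsg type delivered is IP_ORIGDSTADDR, same value


def open_tproxy_udp_listener(addr=("10.8.0.1", 10900)):
    """UDP socket that TPROXY can steer packets to (needs e.g. CAP_NET_ADMIN)."""
    s = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    s.setsockopt(socket.IPPROTO_IP, IP_TRANSPARENT, 1)      # accept TPROXY'd packets
    s.setsockopt(socket.IPPROTO_IP, IP_RECVORIGDSTADDR, 1)  # ask for the original dst
    s.bind(addr)
    return s


def parse_orig_dst(ancdata):
    """Extract (ip, port) from recvmsg() ancillary data, or None if absent."""
    for level, ctype, data in ancdata:
        if level == socket.IPPROTO_IP and ctype == IP_RECVORIGDSTADDR:
            port = struct.unpack("!H", data[2:4])[0]   # sockaddr_in.sin_port
            return socket.inet_ntoa(data[4:8]), port   # sockaddr_in.sin_addr
    return None


def recv_with_orig_dst(sock, bufsize=65535):
    """Receive one datagram; return (payload, client_addr, original_dst)."""
    data, ancdata, _flags, client = sock.recvmsg(bufsize, socket.CMSG_SPACE(16))
    return data, client, parse_orig_dst(ancdata)
```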

According to the V2Fly official documentation, redirect mode supports both TCP and UDP. In theory, then, UDP could reuse the REDIRECT setup above. That would mean adding iptables -t nat -A MYPROXY -p udp -j REDIRECT --to-ports 10900, plus a matching iptables -t nat -A PREROUTING -p udp -s 10.8.0.0/24 -j MYPROXY jump, since the existing jump only matches TCP. However, the example in the dokodemo-door official documentation uses REDIRECT for TCP and TPROXY for UDP. Following that official example, I used TPROXY for UDP rather than REDIRECT.

Add the forwarding rule with iptables in the host network namespace, along with a policy routing rule and a routing table.

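```bash
# Routing table 10900: treat every destination as local
ip route add local default dev lo table 10900
# Packets carrying mark 10900 use table 10900
ip rule add fwmark 10900 lookup 10900
# Steer container UDP to the transparent socket on 10.8.0.1:10900 and mark it
iptables -t mangle -A PREROUTING -s 10.8.0.0/24 -p udp -j TPROXY --on-port 10900 --on-ip 10.8.0.1 --tproxy-mark 10900/10900
```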

The TPROXY rule in the mangle table matches every UDP packet from 10.8.0.0/24 (UDP traffic from the containers). It assigns the packet to the socket listening on 10.8.0.1:10900 and sets mark 10900. The ip rule then routes marked packets through table 10900 to local delivery, where V2Fly receives them. In PREROUTING, netfilter runs the raw table first, then mangle, then nat. TPROXY has to act before NAT, and the TPROXY target is only valid in the mangle table's PREROUTING chain (and chains called from it) anyway. So the rule goes in mangle, not nat.
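The mark and mask arithmetic is easy to get wrong if other tools also set packet marks. The sketch below models two kernel behaviors; the function names are illustrative.

- TPROXY (xt_TPROXY) updates the mark as (mark & ~mask) ^ value.
- An ip rule with a nonzero fwmark and no explicit mask compares all 32 bits.

```python
MASK32 = 0xFFFFFFFF


def tproxy_set_mark(old_mark, value, mask=MASK32):
    """Mark after `-j TPROXY --tproxy-mark value/mask`."""
    return ((old_mark & ~mask) ^ value) & MASK32


def fwmark_rule_matches(mark, fwmark, mask=MASK32):
    """`ip rule add fwmark X[/MASK]`: masked bits of mark must equal X."""
    return ((mark ^ fwmark) & mask) == 0


if __name__ == "__main__":
    m = tproxy_set_mark(0, 10900, 10900)
    print(m, fwmark_rule_matches(m, 10900))          # mark 10900 selects table 10900
    m2 = tproxy_set_mark(0x10000, 10900, 10900)      # hypothetical: another tool set bit 16
    print(m2, fwmark_rule_matches(m2, 10900), fwmark_rule_matches(m2, 10900, 10900))
```

The second case shows why a masked rule such as fwmark 10900/10900 can matter when something else already sets unrelated mark bits.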

Detailed packet path:

- A UDP packet is sent from inside a container with destination 1.2.3.4:12345. The first two steps are the same as for TCP above. Then, in the mangle table's PREROUTING chain, the source IP matches, so the TPROXY rule executes. TPROXY finds the local socket listening on 10.8.0.1:10900 with the IP_TRANSPARENT flag set. It assigns that socket (V2Fly's inbound listening socket) to the packet's skb->sk and sets skb->mark to 10900. Unlike TCP, the packet's destination IP and port stay unchanged at 1.2.3.4:12345.

- During routing, the packet has a 10900 mark. Due to the ip rule configuration, it uses table 10900. (The mark number set by tproxy and the route table number are independent and can be set to any value separately - they don’t need to match. Here they’re set the same just for clarity.)

- The packet's destination IP is 1.2.3.4. Table 10900's routing rule, local default dev lo, treats every IP as local, so the packet enters local delivery processing.

- Netfilter's TPROXY has already set skb->sk to V2Fly's inbound listening socket. During local delivery, inet_steal_sock() therefore hands the packet directly to that socket, so it successfully reaches V2Fly. This skips the usual socket table lookup for a socket listening on the packet's destination IP and port.

- The packet enters V2Fly for processing. At this point, the destination IP and port in the packet’s IP header 1.2.3.4:12345 are the actual destination. V2Fly requests the destination through the proxy tunnel.

- The destination server's UDP response returns to V2Fly through the tunnel, and V2Fly replies to the container. This differs from TCP. For TCP, POSTROUTING SNAT changes the reply's source back to the remote server's IP and port. For UDP, V2Fly itself sets the reply's source IP and port to the server's, because a socket with IP_TRANSPARENT enabled can send from a non-local address. No SNAT is needed.
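The source spoofing in the last step can be sketched as follows. This is a minimal illustration, not V2Fly's code; the helper names are hypothetical. It assumes Linux and enough privilege (such as CAP_NET_ADMIN) to set IP_TRANSPARENT. The reply socket binds to the server's address, which is not local, so the container sees the reply coming from 1.2.3.4:12345.

```python
import socket

# Use the module's constant if present; otherwise 19 from <linux/in.h> (assumed).
IP_TRANSPARENT = getattr(socket, "IP_TRANSPARENT", 19)


def open_spoofed_reply_socket(orig_dst):
    """UDP socket bound to a non-local address (the real server's ip:port)."""
    s = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        s.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
        s.setsockopt(socket.IPPROTO_IP, IP_TRANSPARENT, 1)  # needs e.g. CAP_NET_ADMIN
        s.bind(orig_dst)  # non-local bind is allowed because of IP_TRANSPARENT
    except OSError:
        s.close()
        raise
    return s


def send_spoofed_reply(payload, client, orig_dst):
    """Send payload to the container so it appears to come from orig_dst."""
    with open_spoofed_reply_socket(orig_dst) as s:
        s.sendto(payload, client)
```

For the example flow, this would be `send_spoofed_reply(data, ("10.8.0.12", port), ("1.2.3.4", 12345))`, where `port` is the container's source port.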

Step 4: Testing and Solving the UDP NoPorts Problem

During actual testing, TCP traffic from containers worked smoothly, but UDP couldn’t be sent. After enabling V2Fly’s debug logs, I found that V2Fly wasn’t receiving UDP packets sent by containers.

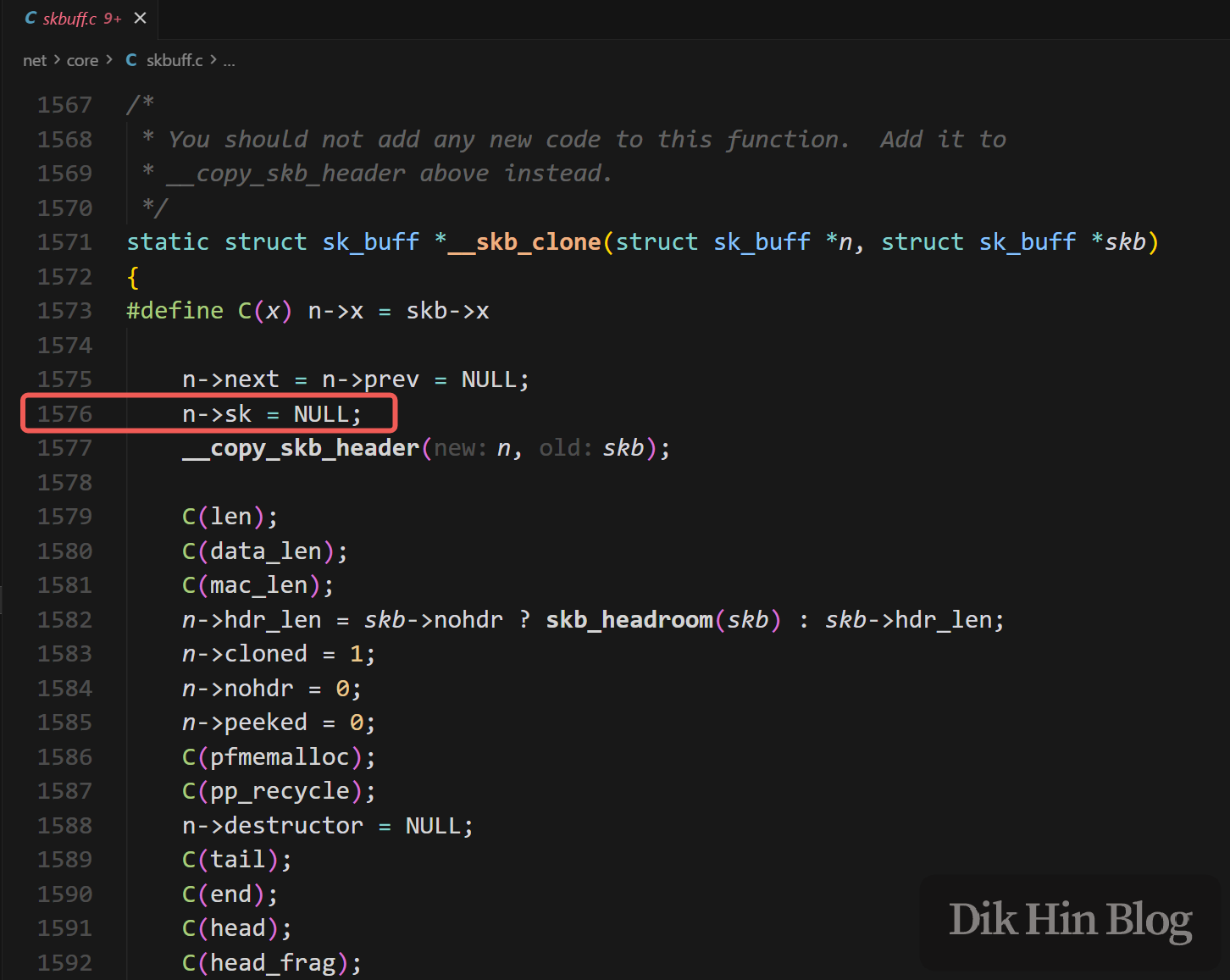

After days of troubleshooting and reading kernel source code, I found the cause:

1. The bridge's L2 code runs netfilter PREROUTING first, in br_nf_pre_routing(), and that is where TPROXY sets the packet's skb->sk.
2. When the bridge's prerouting flow ends, it calls netif_receive_skb() to hand the packet to the IP layer.
3. On entry to the IP layer, ip_rcv_core() calls skb_share_check(). It finds the skb's reference count is greater than 1, so it calls skb_clone() to clone the packet.
4. Cloning clears skb->sk, so the value pre-set by TPROXY is lost. This happens at line 1576 in skbuff.c (based on kernel 6.12.49).

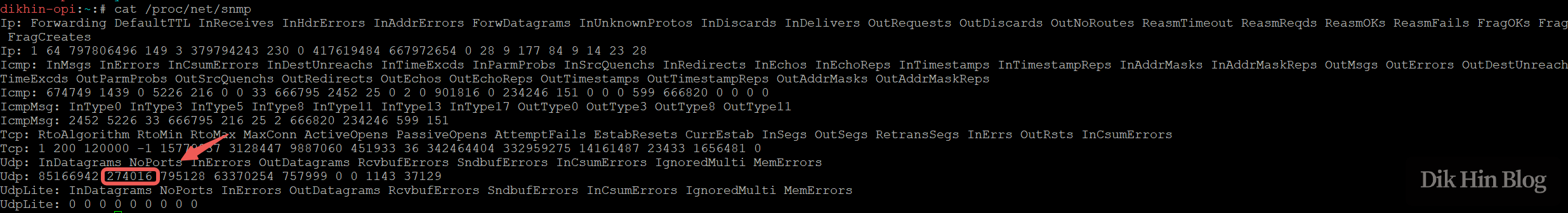

Because the bridge has already run PREROUTING, ip_sabotage_in() filters the packet out at the IP layer, so PREROUTING does not run a second time. The TPROXY rule therefore never gets another chance to set the socket. When the packet reaches local delivery after routing, it has no pre-assigned socket, so the kernel looks up a socket listening on the packet's destination IP and port. No local socket listens on 1.2.3.4:12345 (V2Fly listens on 10.8.0.1:10900), so the lookup fails and the UDP packet is dropped with a NoPorts error.
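The failure can be summarized with a toy model. This is not kernel code: `Skb` and the helpers are hypothetical stand-ins for the kernel functions named above. The model assumes, as described, that the clone keeps the mark but drops skb->sk. Routing through table 10900 is assumed to succeed in both cases, because the mark survives.

```python
from dataclasses import dataclass
from typing import Optional

# Toy model: which socket a TPROXY'd UDP packet reaches at local delivery.
LISTENERS = {("10.8.0.1", 10900): "v2fly-inbound"}  # locally bound sockets


@dataclass
class Skb:
    dst: tuple                # (ip, port) from the IP/UDP headers
    sk: Optional[str] = None  # socket pre-assigned by TPROXY, if any
    mark: int = 0


def tproxy(skb):
    """mangle PREROUTING TPROXY rule: pre-assign V2Fly's socket, set the mark."""
    skb.sk = LISTENERS[("10.8.0.1", 10900)]
    skb.mark = 10900


def clone(skb):
    """Stand-in for skb_clone(): the mark is copied, the socket is not."""
    return Skb(dst=skb.dst, sk=None, mark=skb.mark)


def local_deliver(skb):
    if skb.sk is not None:  # like inet_steal_sock(): use the pre-set socket
        return skb.sk
    # Otherwise look up a socket bound to the packet's own destination ip:port
    return LISTENERS.get(skb.dst, "drop (NoPorts)")


def receive(bridge_nf_call_iptables):
    skb = Skb(dst=("1.2.3.4", 12345))
    if bridge_nf_call_iptables:
        tproxy(skb)       # PREROUTING runs inside br_nf_pre_routing()
        skb = clone(skb)  # ip_rcv_core() -> skb_share_check() clones; sk is lost
        # ip_sabotage_in() skips PREROUTING at the IP layer: TPROXY never reruns
    else:
        skb = clone(skb)  # if ip_rcv_core() clones, that happens before TPROXY
        tproxy(skb)       # PREROUTING (and TPROXY) runs once, at the IP layer
    return local_deliver(skb)


if __name__ == "__main__":
    print("bridge-nf-call-iptables=1:", receive(True))
    print("bridge-nf-call-iptables=0:", receive(False))
```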

The network statistics in /proc/net/snmp confirm this: the UDP NoPorts counter kept increasing.
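A small parser makes the NoPorts counter easy to watch. This sketch assumes the usual /proc/net/snmp layout, where each protocol has a header line followed by a value line with the same prefix (for example "Udp:"). The helper names are illustrative.

```python
def parse_snmp(text, proto="Udp"):
    """Return {field: value} for one protocol section of /proc/net/snmp."""
    prefix = proto + ":"
    rows = [line.split()[1:] for line in text.splitlines()
            if line.split()[:1] == [prefix]]  # exact prefix, so "UdpLite:" is skipped
    header, values = rows[0], rows[1]         # first line: names, second: counters
    return dict(zip(header, (int(v) for v in values)))


def read_udp_noports(path="/proc/net/snmp"):
    """Current Udp NoPorts counter on this host."""
    with open(path) as f:
        return parse_snmp(f.read())["NoPorts"]
```

Reading `read_udp_noports()` a few seconds apart while a container sends UDP shows whether the counter is climbing.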

The solution is to stop the bridge from passing IPv4 traffic through iptables (bridge netfilter). Packets then reach the IP layer directly and run the complete PREROUTING flow there, including the TPROXY rule:

```bash
sysctl -w net.bridge.bridge-nf-call-iptables=0
```

After this configuration, I ran tests for several days. Both TCP and UDP worked perfectly, successfully implementing independent transparent proxy tunnels for each container.

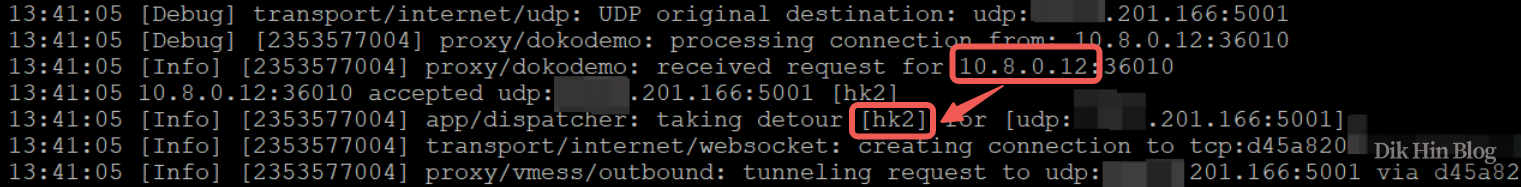

The running logs below show container 10.8.0.12 sending UDP packets to x.x.201.166; the packets reach V2Fly and are routed to the hk2 proxy tunnel: